Over five years ago, around the time BPEL4WS came out, WSCI was released. Because this was at the peak of the IBM/MSFT-versus-everyone-else wars, the uneducated observer saw it as yet another attempt by Oracle et al. to fight the beast. However, WSCI was a far different type of animal. It went to the W3C under the guise of the WS-Choreography group, and eventually WS-CDL was born. WS-CDL is one of those standards that I've been observing from afar: I was involved at the periphery in the early years of WS-T and OASIS WS-CAF, because of the influence of transactions (and context), but apart from being on the mailing list I've had no further input. But WS-CDL is also one of the nicest, most powerful and most under-rated standards around. So although I've been watching from afar, I've also been waiting for the right moment to use its power in whatever way makes sense.

At Arjuna there were limited opportunities for this. I tried with a few of our engagements, but the "Why do I want this when there's BPEL?" question kept coming up. As a result, in some ways WS-CDL is also one of the most frustrating standards: you really want to shake the people who ask those questions and force them to read up on WS-CDL rather than believe the anti-publicity. I also wish that some of the bigger players involved in its development had done a lot more. Oh well, such is life in the standards world.

However, once I joined JBoss and was put in charge of the JBossESB development, things started to change. Here was a great opportunity! Here was a distributed system that was under development, would use Web Services and SOA at its heart, would be about large scale (in both the number of participants and their physical locality) and where you simply did not control all of the infrastructure over which applications ran.

Now many people in our industry ignore formal methods or pay lip service to them, believing they are only of use to theoreticians. Unfortunately, until that changes Computer Science will always be a "soft" science: more an art than anything. That's not a good thing because it limits efficiency. In a local application (everything on one machine) you can get away with cutting some corners. But in a distributed system, particularly one that needs to be fault tolerant, the consequences are worse. For example, how do you prove the correctness of a system when you cannot reason about the ways in which the individual components (or services) will act given specific expected (or unexpected) stimuli? Put another way, how can you ensure that the system behaves as expected and continues to do so as it executes, especially if it has non-deterministic properties? As the complexity of your application increases, this problem rapidly becomes intractable.

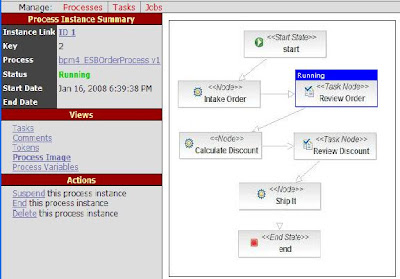

Rather than just throwing together an "architecture" diagram, developing services in relative isolation and trusting to luck (yes, that's often how these things are developed in the real world), we decided that something better had to exist for our customers. Now there are formal ways of doing this, using Petri nets for example. WS-CDL uses Pi-Calculus to help define the structure of your services and composite application; you can then define the flow of messages between them, building up a powerful way in which to reason effectively about the resulting system. On paper the end result is something that can be shown to be provably correct. And this is not some static, development-time process either. Because these "contracts" between services work in terms of messages and endpoints, you can (in theory) develop runtime monitoring that enhances your governance solution and is (again) provably correct: not only can you reason successfully about your distributed system when it is developed and deployed initially, but you can continue to do so as it executes. A good governance solution could tie into this and be triggered when the contract is violated, either warning users or preventing the system from making forward progress (always a good thing if a mission-critical environment is involved).
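To make the runtime monitoring idea a little more concrete, here is a minimal sketch in Python. It is not WS-CDL syntax and not the API of any real tool: the `Message`, `CONTRACT` and `Monitor` names, the roles and the message kinds are all made up for illustration. The assumption is simply that a contract can be reduced to "in this state, these messages between these endpoints are allowed", and that a monitor can watch the traffic and complain when something else turns up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    """One observed message between two endpoints (hypothetical shape)."""
    sender: str
    receiver: str
    kind: str


class ContractViolation(Exception):
    """Raised when an observed message is not permitted by the contract."""


# Hypothetical contract: for each state, the messages allowed next and the
# state each one leads to. Roles and message kinds are illustrative only.
CONTRACT = {
    "start": {Message("Buyer", "Seller", "bid"): "bid_placed"},
    "bid_placed": {Message("Seller", "CreditAgency", "creditCheck"): "checking"},
    "checking": {
        Message("CreditAgency", "Seller", "creditOk"): "accepted",
        Message("CreditAgency", "Seller", "creditFailed"): "rejected",
    },
    "accepted": {Message("Seller", "Buyer", "bidAccepted"): "done"},
    "rejected": {Message("Seller", "Buyer", "bidRejected"): "done"},
}


class Monitor:
    """Tracks the contract state and rejects messages that violate it."""

    def __init__(self, contract, initial="start"):
        self.contract = contract
        self.state = initial

    def observe(self, msg):
        allowed = self.contract.get(self.state, {})
        if msg not in allowed:
            # A governance layer could warn users or halt progress here.
            raise ContractViolation(
                f"unexpected {msg} while in state {self.state!r}"
            )
        self.state = allowed[msg]
        return self.state


if __name__ == "__main__":
    monitor = Monitor(CONTRACT)
    monitor.observe(Message("Buyer", "Seller", "bid"))
    try:
        # The seller skips the credit check: the contract does not allow it.
        monitor.observe(Message("Seller", "Buyer", "bidAccepted"))
    except ContractViolation as violation:
        print("contract violated:", violation)
```

A real choreography also has to cope with concurrency, many simultaneous conversations and correlation of messages to sessions, none of which this sketch attempts.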

Now this is complex stuff. It's not something I would like to have to develop from scratch. Fortunately for JBoss (now Red Hat), there's a company that develops software that is based on WS-CDL: Hattrick Software, principal sponsors of the Pi4 Technologies Foundation. Fortunately again, one of the key authors of the standard (my friend/colleague Steve Ross-Talbot) is both CTO of Hattrick Software and Chair of the Pi4 Technologies Foundation. Steve has been evangelizing WS-CDL for years, pushing hard against those doors that have "Closed: Using BPEL. Go Away." signs on them. We're starting to see the light as users see the deficiencies of BPEL (the phrase "Use the right tool for the right job" keeps coming to mind) and look for solutions, although it's been a long, slow process. But when like minds come together, sometimes "something wonderful" can happen. People at Hattrick have been working with us at Red Hat for several months to integrate their product (which is open source and lets you develop scenarios to test out your deployments and then monitor them for correctness once they go live) with JBossESB and the SOA Platform. This work is almost complete and it will offer a significant advantage to both companies' customers.

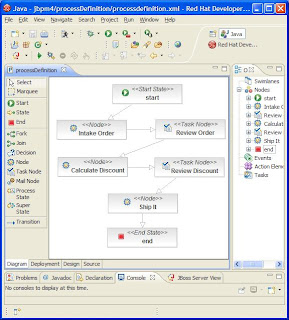

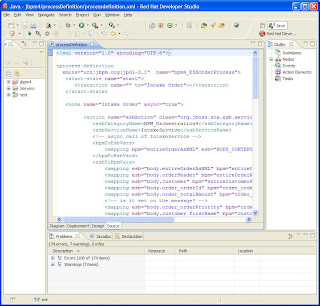

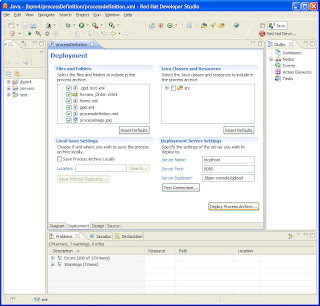

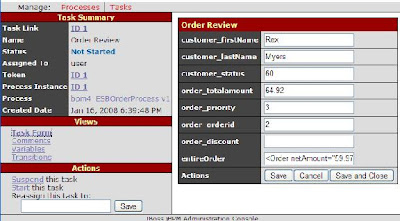

I can't really go into enough detail here to show how good this combination is, but here are a few example images. The example scenario is a distributed auction, with participants including a seller and several buyers. With the WS-CDL tooling you can define your scenarios (the interactions between parties) like this

You can then use the tool to define the roles and relationships for your application:

And then you can dive down into very specific interactions such as credit checking:

Or winning the auction:

We're hoping to offer a free download of the Hattrick Software for the SOA Platform in the new year, and maybe even for the ESB. Since it's all Eclipse-based, it should be relatively straightforward to tie this into our overall tooling strategy as well, providing a uniform approach to system management and governance. But even without this, what this combination offers is very important: you can now develop your applications and services and prove they work before deployment. Furthermore, in the SOA world of service re-use, where you probably didn't develop everything, a suitable WS-CDL-related contract for each service should allow developers to re-use services in a more formal manner and prove a priori that the composite application is still correct, rather than doing things in the ad hoc manner that currently pervades the industry.
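As a rough illustration of what checking a re-used service against a contract might look like, here is a small Python sketch. Again, this is not WS-CDL and not how the Hattrick tooling works: the `AUCTION` choreography, the role names and the `project` and `conforms` helpers are hypothetical, and the sketch assumes a strictly linear conversation with no choices or parallel branches. The idea is that a global description can be projected onto one role, giving the sends and receives that role is expected to perform, and a service's declared behaviour can then be compared with that projection before anything is deployed.

```python
# Hypothetical global choreography: an ordered list of
# (sender, receiver, message kind) interactions for one auction bid.
AUCTION = [
    ("Buyer", "Seller", "bid"),
    ("Seller", "CreditAgency", "creditCheck"),
    ("CreditAgency", "Seller", "creditOk"),
    ("Seller", "Buyer", "bidAccepted"),
]


def project(choreography, role):
    """Return the local view of one role: its sends and receives, in order."""
    local = []
    for sender, receiver, kind in choreography:
        if sender == role:
            local.append(("send", receiver, kind))
        elif receiver == role:
            local.append(("receive", sender, kind))
    return local


def conforms(declared, choreography, role):
    """True if a service's declared behaviour matches the role's projection."""
    return list(declared) == project(choreography, role)


if __name__ == "__main__":
    # Behaviour declared by an existing credit-checking service we want to re-use.
    credit_service = [
        ("receive", "Seller", "creditCheck"),
        ("send", "Seller", "creditOk"),
    ]
    print(conforms(credit_service, AUCTION, "CreditAgency"))
```

Exact equality is the simplest possible conformance rule; anything realistic would need a notion of compatible behaviour that tolerates choices and extra, harmless interactions.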