Wednesday, November 26, 2008

Smooks on InfoQ

Our very own Tom Fennelly has had a great article on Smooks published on InfoQ. Take a look.

Friday, November 21, 2008

Stonehenge

We just helped start Apache Stonehenge. As I've said elsewhere several times, interoperability is very important for Web Services, so hopefully this will help the industry as a whole as well as our projects.

Friday, October 31, 2008

SOA Platform 4.3 is released!

We're pleased to announce that version 4.3 of our SOA Platform has just been released. We've made quite a few improvements since the first release, including closer integration with JON to provide runtime governance of services, improved Web Services support, better performance, reliability etc. SOA-P 4.3 GA is based on JBossESB 4.4 CP1, so you can grab the source from the repository. As always, give us feedback so we can improve it even more in subsequent releases!

Friday, October 24, 2008

Wise as a JBoss project

We've been working with the Wise project for a while and it's offered us some really nice capabilities. So it's with great pleasure that I can now announce that Wise has moved to become a JBoss Project. Welcome to the entire Wise community! I'm sure we've all got great things ahead!

Wednesday, October 15, 2008

Evaluating & Getting Started with JBoss ESB

I am personally a learn-by-example, learn-by-demonstration kind of person. I prefer to see the technology running before I'm willing to invest time in downloading it, reading the documentation and trying it out. The following items offer a great introduction to the capabilities of the JBoss ESB. Please review them and, if you have questions, get after us in the online forums.

1) jBPM and ESB 4.2 Demo: http://www.redhat.com/v/webcast/SOAdemo2/SOADemo2.html

A Flash-based recording of just the demonstration portion of the Apr 17th webinar. This is your best starting point if you only have 17 minutes for an immersion into SOA the JBoss way. This demo includes the Spring AOP trick of "eavesdropping" (aka wiretap) on the JPetstore so that the order is routed through the ESB and into a jBPM process which orchestrates the services & manages the human tasks. Drools handles the business logic and Smooks takes care of the XML to POJO transformation. We even show a bit of the JBoss Tools project, which has a jboss-esb.xml editor.

2) ESB 4.4 and SOA Platform 4.3 - Deep Dive Webinar

(Oct 2 2008)

This presentation focuses on what's new and cool in the latest versions of JBoss ESB and has live demonstrations of:

- multi-language scripting: a service created via JRuby, Jython, Beanshell, and Groovy

- an Excel spreadsheet for service business logic (decision tables from Drools)

- a declarative model for Web Services & XSD validations, including Visual Basic.NET consumption

- a declarative model for service security based on JAAS

- JBoss Operations Network (JON) management & monitoring tools, which are now available at: http://www.jboss.org/jopr

Note: Some of these demonstrations require the use of trunk in the JBoss ESB SVN.

3) ESB 4.2 & SOA Platform 4.2 - Deep Dive Webinar

(Apr 17 2008)

SOA: Service Oriented Agility - this session introduces the IT challenges that SOA and an ESB address and focuses on demonstrating how jBPM, Drools & JBoss ESB can be used together to create a service-oriented and agile solution. The demonstration portion is the same as in item 1 above; however, there is valuable content in the general presentation and Q&A session.

Wednesday, September 24, 2008

JBossESB customer visit

I've been spending a fair bit of time recently talking with and visiting customers. Last week it was Amsterdam where we had a really good couple of days. It was fun and informative to meet everyone.

Monday, August 11, 2008

Zero-code Web Services addition

We've had Web Services support in JBossESB for a long time (relative to the age of the project), but it has often required users to code more than they may have liked. In recent months we've been working more and more with Stefano Maestri of the Wise project to improve things. Stefano has just posted a blog entry on where things now stand with the latest ESB 4.4 release. It's well worth taking a look at the posting as well as the ESB!

JBossESB wins InfoWorld Bossie award

It's always nice to get recognition from others in the industry and particularly from InfoWorld. So congratulations to everyone involved in the ESB and SOA Platform efforts!

Monday, July 7, 2008

Smooks released as one of the "Open-Source-Tools" in JavaMagazine

Not sure how "big" this German magazine is, but anyway... their latest edition includes Smooks as one of the distributed "Open-Source-Tools". See article ("Leser-CD" tab).

Saturday, June 21, 2008

Writing Integration Tests with the AbstractTestRunner

Writing integration tests around your ESB Services can be a bit of a pain in the head under the current 4.x codebase (5.0 will be fixing many of these issues). A number of resources are managed statically. This is "OK" under normal running conditions, but a major head wrecker when trying to write multiple isolatable tests i.e. tests where you can easily dump/create clean environments for each test, without one test magically screwing with another.

A while ago I created the ESBConfigUtil test utility class (dumb name), which went some way towards making it a little easier to write tests, but was still a bit painful. The latest addition is the AbstractTestRunner class, which I think is a bit better again. It wraps the ESBConfigUtil class, setting up the test env (ESB deployment, Registry, ESB properties à la the beloved PropertyManager), starting the ESB Controller, running the test and then shutting down the Controller and ensuring that the test env is returned to its state from before the test run (or at least tries to ;-) ), which hopefully helps to avoid leakage between tests.

The AbstractTestRunner tries to allow you to write Integration level tests more easily. You can define a full ESB configuration and run it through the ESB Controller class, run tests (invoke endpoints etc).

As an example from the InVM transport tests, we have the following ESB configuration named "in-listener-config-01.xml":

    <?xml version = "1.0" encoding = "UTF-8"?>
    <jbossesb xmlns="http://anonsvn.labs.jboss.com/labs/jbossesb/trunk/product/etc/schemas/xml/jbossesb-1.0.1.xsd">
        <services>
            <service category="ServiceCat" name="ServiceName"
                     description="Test Service">
                <actions mep="RequestResponse">
                    <action name="action"
                            class="org.jboss.soa.esb.mock.MockAction" />
                </actions>
            </service>
        </services>
    </jbossesb>

Writing some test code around this, with the configuration deployed in the ESB Controller (quite near to the full running env):

    public void test_async() throws Exception {
        AbstractTestRunner testRunner = new AbstractTestRunner() {
            public void test() throws Exception {
                ServiceInvoker invoker =
                        new ServiceInvoker("ServiceCat", "ServiceName");
                Message message = MessageFactory.getInstance().getMessage();
                message.getBody().add("Hi there!");
                invoker.deliverAsync(message);
                sleep(50);
                assertTrue(message == MockAction.message);
            }
        }.setServiceConfig("in-listener-config-01.xml");
        testRunner.run();
    }

So as you can see, the test method creates an anonymous instance of the AbstractTestRunner class. It implements the "test" method, in which the actual test code is placed. The Service config file is set on the anonymous instance through the setServiceConfig method (the String param version of this method looks up the resource using getClass().getResourceAsStream()). The ESB properties file can be set through the setEsbProperties method (supports the same mechanisms as the setServiceConfig method). Both setServiceConfig and setEsbProperties methods return the instance of the anonymous inner class (i.e. "return this;"), so you can string the config calls together e.g.:

    public void test_async() throws Exception {
        AbstractTestRunner testRunner = new AbstractTestRunner() {
            public void test() throws Exception {
                // ... test code ...
            }
        }.setEsbProperties("jbossesb-properties.xml")
         .setServiceConfig("jboss-esb.xml");
        testRunner.run();
    }

Of course, you need to call the run() method to run the actual test code. You can do this as above, or you can just string it onto the end of the anonymous inner class:

    public void test_async() throws Exception {
        new AbstractTestRunner() {
            public void test() throws Exception {
                // ... test code ...
            }
        }.setServiceConfig("jboss-esb.xml").run();
    }

Calling the run() method:

- Parses the Service configuration, creating the Controller instance.

- Installs the specified ESB properties (recording the currently installed properties)

- Installs and configures the Registry.

- Starts the Controller

- Calls the "test()" method to run the test code.

- Stops the Controller

- Uninstalls the Registry.

- Resets the ESB properties to their pre-test state.
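To make that ordering concrete, here is a minimal, self-contained sketch of the same lifecycle pattern. It is not the real AbstractTestRunner: the class name LifecycleRunnerSketch, the log list and the use of try/finally for teardown are my own assumptions for illustration, and the steps only record their names rather than touching a Controller or Registry.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the runner described above; NOT the JBossESB class.
abstract class LifecycleRunnerSketch {
    // Records each lifecycle step so the ordering can be inspected afterwards.
    final List<String> log = new ArrayList<>();
    private String serviceConfig;
    private String esbProperties;

    // Fluent setters return "this" so the config calls can be strung together.
    LifecycleRunnerSketch setServiceConfig(String resource) {
        this.serviceConfig = resource;
        return this;
    }

    LifecycleRunnerSketch setEsbProperties(String resource) {
        this.esbProperties = resource;
        return this;
    }

    // The test body, supplied by an anonymous subclass.
    abstract void test() throws Exception;

    void run() throws Exception {
        log.add("parse " + serviceConfig);
        log.add("install properties " + esbProperties);
        log.add("install registry");
        log.add("start controller");
        try {
            test();
        } finally {
            // Assumption: teardown runs even when test() throws, so that
            // one failing test does not leak state into the next one.
            log.add("stop controller");
            log.add("uninstall registry");
            log.add("restore properties");
        }
    }
}
```

An anonymous subclass that implements test(), chains the two setters and then calls run() would leave eight entries in the log, with the test body in the middle and "restore properties" last, whether or not the test body throws.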

Would be great if people could try it in their tests so we can evolve it and fix any issues. I hope it can make integration testing a bit easier in the 4.x codebase.

Wednesday, June 18, 2008

Complex Splitting, Enriching and Routing of Huge Messages

ESB Administrators sometimes have a requirement to split message payloads into smaller messages for routing to one or more Service Endpoints (based on content: Content Based Routing). Add Endpoint based Transformation, Message Enrichment and the fact that these messages can be Huge in size (GBs)... you end up with some interesting problems that need solving!

I've just added a new Quickstart to the 4.4 codebase that demonstrates one approach (set of approaches) to solving some of these issues with JBossESB. I uploaded the readme, so people can read the details (as well as look at the flash demo) without going to too much trouble.
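For anyone who wants the general shape of the problem before opening the Quickstart, here is a rough plain-Java sketch of split, enrich and content-based route over a streamed payload. It is not the Quickstart's code and uses no Smooks or JBossESB API: the class name, the one-record-per-line format, the "EU" routing rule, the endpoint names and the seq enrichment field are all assumptions made up for illustration.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch only; a real deployment would route ESB messages.
class SplitAndRouteSketch {
    // Stand-in for service endpoints: endpoint name -> records delivered to it.
    final Map<String, List<String>> endpoints = new LinkedHashMap<>();

    // Content-based routing rule (assumed): the first field picks the endpoint.
    static String endpointFor(String record) {
        String region = record.split(",", 2)[0].trim();
        return region.equals("EU") ? "eu-orders" : "default-orders";
    }

    // Reads one record at a time, so the whole payload is never held in memory.
    int splitAndRoute(Reader payload) throws IOException {
        int routed = 0;
        try (BufferedReader in = new BufferedReader(payload)) {
            String record;
            while ((record = in.readLine()) != null) {
                if (record.trim().isEmpty()) {
                    continue; // skip blank lines between records
                }
                routed++;
                // Enrichment (assumed): tag each split record with its sequence number.
                String enriched = record.trim() + ",seq=" + routed;
                endpoints.computeIfAbsent(endpointFor(record), k -> new ArrayList<>())
                         .add(enriched);
            }
        }
        return routed;
    }
}
```

The point of the streaming loop is that memory use depends on the size of one record rather than the size of the payload, which is what matters once messages reach the GB range.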

Saturday, May 31, 2008

JBossESB 4.3 is released

In case you hadn't noticed, the team released JBossESB 4.3. This is the first community release since the SOA Platform came out and contains many of the great features available there, including InVM transport, Smooks 1.0 support, more BPM specific quickstarts, performance improvements, support for the SOA Software registry as a replacement for jUDDI and many many more. But most of all this version has had much more stress testing applied to it. So if you're using an earlier version of JBossESB then we recommend you give this release a try!

Wednesday, May 14, 2008

Monday, May 5, 2008

Come meet us at JavaOne

As well as having a presence in the Pavilion, we're presenting at a couple of sessions this year. The first is a panel session around SCA/OpenCSA standardisation efforts and the second is a BOF on OSGi and SOA (very relevant to our ESB 5.0 architecture). If you're around and just want to say 'hi', come on over.

Something I forgot to mention earlier, but we were also represented on the Program Committee for JavaOne this year: an interesting experience and definitely something to repeat if possible.

Saturday, April 12, 2008

Smooks improvements and futures

If you're a JBossESB user then you've probably used Smooks, which is our default transformation engine. Unlike other ESBs that may only give you XSLT based transformations (perhaps as an afterthought to their architecture), Smooks provides much more than this, and transformation has been at the heart of our architecture since the start. Anyway, Mr Smooks (aka Tom) has been describing the future for Smooks and within our ESB. Definitely worth a read. It's nice to see that others are getting in on the act. What's that they say about imitation and flattery ;-)?

Friday, April 11, 2008

Cloud Computing and the ESB

Whether you call it Cloud Computing, Grid, utility computing, ubiquitous computing, or just large scale distributed systems, computing devices are everywhere. These days I have a mobile phone that is more powerful (processor and memory) than the laptop I had 10 years ago. I suspect my fridge is more powerful than my old BBC Model B computer! Having spent a lot of time working in large-scale distributed systems, the basic requirements are pretty similar: develop objects/services, publish, locate and use. Doing this in a transport agnostic manner is very important as well: how many services running on a mobile phone support JMS? But as a service developer do I really need to tie my service to a specific transport? That breaks reusability: the service logic (excluding transport) should be useable in a variety of different deployments, assuming its dependencies on 3rd party bindings are present (e.g., no good deploying a service on a mobile phone if it needs a local Oracle instance to execute!)

What you need to support cloud computing (for want of a better term) is an infrastructure that allows you to develop your services without having to worry about where they will be deployed. Furthermore, if you can compose services from other services and (re-)deploy them dynamically as your requirements change, that would be a benefit. Many service deployments need to change over time, if for no other reason than machines may fail or performance improvements mean that you want to move a service from one machine to another. Or you may need to deploy more (or fewer) service instances over time to improve availability. Interestingly some of this overlaps with governance.

You also need to be able to cope with dynamic routing (service locations change and you want to route inflight messages, or messages sent to the old address, to the new location). Transformations of data are pretty important too: sending Gigabytes of data to a service that has been deployed on a mobile phone might get a bit costly, but maybe my infrastructure can prune that information while it is inflight and only send a synopsis (or some portion of it that is sufficient for the service to do meaningful work).

Ultimately the service deployments and how they are related to the devices that are available to the infrastructure should be handled automatically by the infrastructure, with the capability to override this or provide hints as to more appropriate deployment choices (e.g., "please don't deploy this service on a mobile phone!") The infrastructure then becomes responsible for managing those services, guaranteeing specific SLAs (so it may mean migrating a service from one device to another, for example, to maintain a level of performance or availability), pulling in more devices or distributing more instances of the service across multiple devices, again to maintain policies and SLAs.

Overall the infrastructure and the way it supports development of services assists in the realisation of loosely coupled, non-brittle applications. By this point you may see where I'm going with this. In my opinion a good infrastructure for cloud computing is also a good infrastructure for SOA, i.e., an SOI. In our case, that would be JBossESB as it continues to evolve and improve. Stay tuned!

Tuesday, February 19, 2008

JBossWorld 2008 update

Lots of activity at JBossWorld 2008! As you can find elsewhere, this was the biggest JBW ever and all of the presentations I attended were packed. The ESB/SOA Platform related presentations will be uploaded soon and we'll cross post links here as soon as possible. But in the meantime you may be interested in another announcement about the SOA Platform.

Friday, February 15, 2008

SOA-P now available

Yesterday at JBoss World the availability of the JBoss Enterprise SOA Platform was officially announced. I don't know how long this webcast is going to be available but a recording can be found here.

Sunday, February 3, 2008

Tuesday, January 29, 2008

SOA + BPM = Killer Combination

Kurt's recent posting prompted me to put down here what I've been putting off for a while: my thoughts on BPM and SOA. Hopefully it's apparent to everyone that BPM != SOA: they solve different problems. However, that does not mean they cannot complement each other. The recent work on combining jBPM with JBossESB should illustrate that, but let's go further.

One of the key aspects of SOA is agility: the ability to react more quickly to changes in the way IT systems need to be deployed and used, as well as being able to leverage existing IT investments. At this level agility is definitely a concern of T-Shirt And Sandal Man, i.e., IT folks, and not of Men In Suits, i.e., business folks, which is primarily where BPM resides. Where BPM works well is in ensuring that business goals are efficiently aligned with how they are translated into processes, making those processes more reliable, faster and compliant with the business's policies and practices. Fairly obviously, for business folks, these are just as important as IT agility.

SOA and BPM do not compete with one another: they are natural complements. A good combination of SOI (Service Oriented Infrastructure) and BPM will make it natural to orchestrate services (or more likely tasks) as second nature within the deployment and not as some bolt-on which is there simply for compliance reasons. I think the combination of SOA and BPM is a "killer app" because:

- BPM promotes a model-based approach to task definitions, which, if used right, should ensure that what the business wants is what they get. Importantly it's defined by the business folks and not by the infrastructure.

- It encourages a top-down approach to service orchestration and requirements: you don't develop what you don't need. I know this sounds pretty obvious, but you'd be surprised!

- BPM and tooling are natural allies, as they are with SOA.

Remember that SOA is not a product: it does not come in a shrink-wrapped box. So you shouldn't think that it is simply an ESB or some other technology. It is not purely a technology driven initiative. A good SOI will allow the right people in an organisation to take ownership of the components (technology and business) that are important to them. This is one of the reasons why loosely coupled systems work. SOA does not belong to a single group of people (e.g., the typical developer), but to the business as a whole. That's why SOA+BPM work so well together: it is a natural fit.

Friday, January 25, 2008

Service Orchestration using jBPM

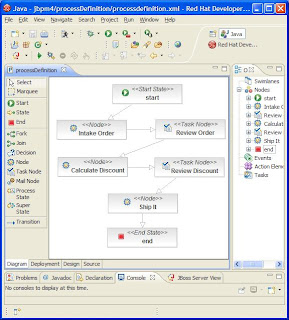

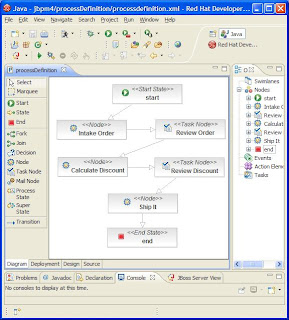

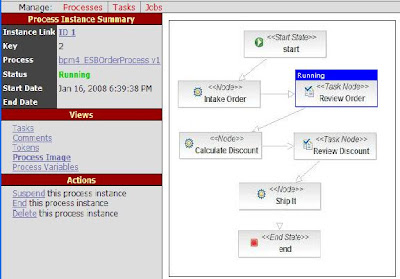

Service Orchestration is the arrangement of business processes. Traditionally BPEL is used to execute SOAP based WebServices, and in the Guide 'Service Orchestration' you can obtain more details on how to use ActiveBPEL with JBossESB. However, if you want to orchestrate JBossESB services regardless of their end point type, then it makes more sense to use jBPM. Figure 1 shows an order process in jBPM Designer view which is part of JBoss Developer Studio. In this post we will describe what it takes for you to start using jBPM for Service Orchestration.

Figure 1. 'OrderProcess' Service Orchestration using jBPM

Step 1. Create the Process Definition

To create the process definition from Figure 1 you drag in components from the left menu bar. To start you'd drag in a Start node, followed by a regular 'node' type, which we gave the name 'Intake Order'. You can now connect the two boxes by selecting a 'transition', and by first clicking on the start node and then on the Intake Order node. Next we need to tell jBPM which JBossESB service backs this node. This is done by attaching a special 'EsbActionHandler' action to the node and configuring it to go out to the service 'InTakeService'. For now you will need to click on the 'source' tab to drop into the XML representation of this node (see Figure 2).

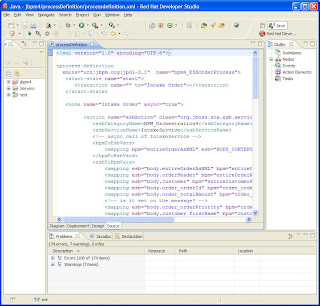

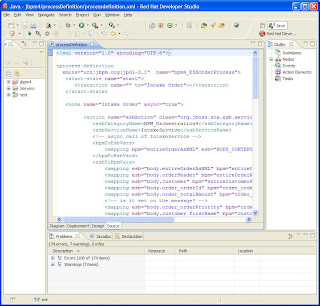

Figure 2. XML Source of the 'OrderProcess' Service Orchestration

In this case the XML configuration for the Intake Order node looks like

The first two subelements, esbServiceName and esbCategoryName, specify the name and category of the service, while the other two elements, bpmToEsbVars and esbToBpmVars, specify which variables should be carried from jBPM to the ESB Message and back respectively. Koen Aers has been working on a special EsbServiceNode so all the configuration can be done in design view.
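As a rough mental model of what those two mapping elements do, the sketch below copies only the listed variables from one context to another. It is plain Java and purely illustrative: the class, the Mapping type and the variable names are hypothetical, and the real handler is configured in the process definition XML rather than through code like this.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of the variable mapping idea; not jBPM or JBossESB API.
class VariableMappingSketch {
    // One mapping entry: copy the value stored under "from" to the key "to".
    static final class Mapping {
        final String from;
        final String to;

        Mapping(String from, String to) {
            this.from = from;
            this.to = to;
        }
    }

    // Copies only the mapped variables; anything not listed stays behind.
    static Map<String, Object> carry(Map<String, Object> source, List<Mapping> mappings) {
        Map<String, Object> target = new HashMap<>();
        for (Mapping mapping : mappings) {
            if (source.containsKey(mapping.from)) {
                target.put(mapping.to, source.get(mapping.from));
            }
        }
        return target;
    }
}
```

Calling carry once with the process variables as the source models the jBPM-to-ESB direction, and calling it again with the service's reply as the source models the way back. Keeping the two mapping lists separate means a service only sees the variables the process designer chose to expose.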

Creation of the rest of the process definition goes in a similar fashion, ending with an 'End' node, which when reached will terminate the process.

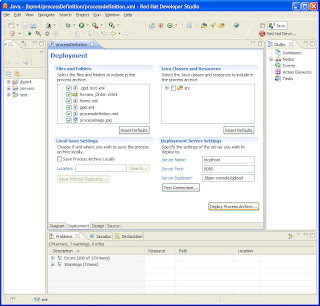

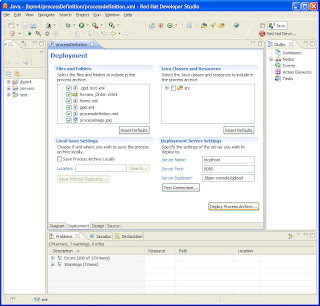

Step 2. Deployment

JBossESB deploys the jBPM core engine in a jbpm.esb archive. This jbpm.esb archive contains the jBPM core engine as well as the configuration and the jbpm-console. To deploy the process click on the 'deployment' tab in the IDE, selecting the files you wish to deploy and clicking on the 'Deploy Process Archive' button.

Figure 3. Deployment of the 'OrderProcess' Service Orchestration

Besides deploying the process definition we also need to deploy the services themselves. Your services are deployed in a yourservices.esb archive. For deployment order you need to specify a depends 'jboss.esb:deployment=jbpm.esb' in the deployment.xml.

Note that deployment of process definitions and esb archives is hot and does not require a server restart.

Step 3. Starting and Running the process

There are multiple ways to start an instance of the process that was deployed in step 2. For instance, you can start one from your own code or from the jbpm-console, but perhaps the easiest way is to start one using an out-of-the-box service. For instance, when your store front receives the order you can create an EsbMessage with the invoice and drop it on the 'StartProcess' service.

You can define a StartProcess service (one for each process definition) in the jboss-esb.xml in yourservices.esb. For instance it can look like

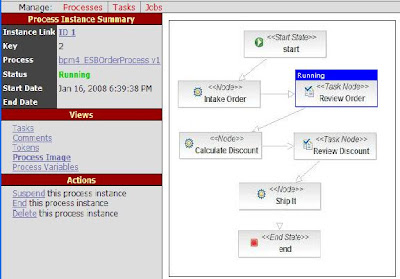

where we use the 'StartProcessInstanceCommand' to invoke a new process instance of the type 'OrderProcess' and with the invocation we insert a business key (invoice ID) and the invoice itself into the jBPM process instance context. Now when a message hits this service a new instance of the shipping process is created and we can look at its state using the jbpm-console (by default running on http://localhost:8080/jbpm-console)

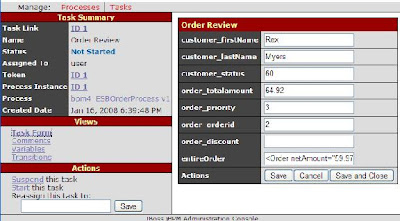

Figure 4. State of an instance of the 'OrderProcess' Service Orchestration

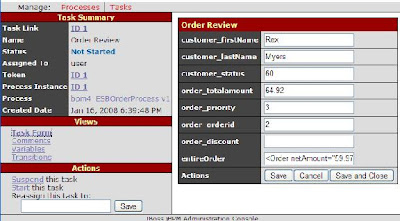

In Figure 4 the 'Review Order' process is highlighted because the process is waiting for a human to review the order. So here we now have integrated human work flow, see Figure 5!

Figure 5. 'Review Order' Human Work Flow Integration

Conclusion

We demonstrated that it is a three step process to use jBPM for Service Orchestration and how to integrate it with Human Work Flow. To get more details you can read the jBPMIntegrationGuide.

Have fun,

--Kurt

Friday, January 18, 2008

JBoss DNA

Related to our governance story, you should definitely take a look at the new JBoss DNA project.

Thursday, January 17, 2008

Tooling and governance

All good SOA platforms need a good SOA governance solution. Governance covers a number of factors and interacts at different levels of the SOA architecture. For example, there are tooling requirements (displaying the important information, customizing the view on the data, etc.) and infrastructure support (for enforcing SLAs, checking when they are [about to be] violated, etc.) The best governance implementations will perform well across these levels. However, you shouldn't be persuaded just by the gloss of a governance solution: don't judge a book by its cover.

So far we've been concentrating on the low-level infrastructure support for governance, both with JON and more native support within the ESB and other projects. Plus, from the start we've made the registry (UDDI in our case) a central component within the ESB. Plus, the Drools team have been making waves with their BRMS implementation, which is important in governance too. And of course there's the tried and tested jBPM Graphical Process Designer (not directly related to governance, but definitely to tooling) and our friends from MetaMatrix. However, what we haven't been able to do until now is discuss where we are going (both with in-house development as well as with partners).

As well as tools from the individual projects within the SOA Platform, here is a brief synopsis of architectural requirements on SOA-P specific tooling and governance. I don't want to go into details about how these tools (and the necessary supporting infrastructure) are implemented. Depending upon the role of the user or component (more in another posting) it makes more sense for some of them to be Eclipse based, whereas others will be Web console based. There will be some tools that ideally would have representations in both arenas. It's important to realise that some of the capabilities I'm going to mention will have to be duplicated across different tooling environments. For instance, sometimes what a sys admin needs to do is also what a developer needs to do (e.g., inspect a service contract). We won't require the sys admin to have to fire up the developer tool in order to do that. And vice versa.

Governance of SOA infrastructures is critically important. If any of you have tried to manage distributed systems in a local area before, you'll understand how difficult that can often be. Imagine expanding that so it covers different business domains where you (the developer or deployer) do not control all of the underlying infrastructure and cannot work on the assumption that people are trustworthy. With SOA governance, there is a runtime component that executes to ensure things like SLAs are maintained, but there is also a tooling component (runtime for management and design time).

With the JBoss governance/tooling effort, you'll be able to graphically display:

- MTTF/MTTR information on behalf of specific nodes and services on those nodes. Also for all nodes and services that are deployed.

- throughput for services.

- time taken to process specific types of messages (e.g., how long to do transformations, how long to do transformations on behalf of user X).

- number of requests serviced during the lifetime of the service/node (in general, it is always important to distinguish between services and the nodes on which they execute).

- number of faults (service/node) in a given duration.

- information about where messages are being received.

- information about where messages are being sent (responses as well as faults).

- potential dependency tracking data. This can be used to determine sources of common failure. Can also be used when deciding whether or not (and where) to migrate services, for improved availability or performance.

- what versions of services exist within a specific process (VM).

- Includes sending probe messages that can test availability and performance on request. However, this functionality needs to be duplicated into the design-time tooling.

Note, you can do some of this with the current releases!
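As a rough illustration of the first item in the list, MTTF and MTTR can be derived from a log of how long a service (or node) ran before each failure and how long each repair took. This is only a sketch and not JBossESB or JON code: the `AvailabilityStats` class and its method names are hypothetical, invented for this example.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: derives MTTF/MTTR from recorded up and down periods. */
class AvailabilityStats {
    // Durations (in seconds) the service ran before each failure.
    private final List<Long> upPeriods = new ArrayList<>();
    // Durations (in seconds) each repair took.
    private final List<Long> downPeriods = new ArrayList<>();

    void recordFailureAfter(long secondsUp) { upPeriods.add(secondsUp); }
    void recordRepairAfter(long secondsDown) { downPeriods.add(secondsDown); }

    /** Mean time to failure: average uptime preceding a failure. */
    double mttf() { return mean(upPeriods); }

    /** Mean time to repair: average downtime following a failure. */
    double mttr() { return mean(downPeriods); }

    /** Steady-state availability: MTTF / (MTTF + MTTR); 1.0 if nothing was recorded. */
    double availability() {
        double f = mttf();
        double r = mttr();
        return (f + r) == 0 ? 1.0 : f / (f + r);
    }

    private static double mean(List<Long> values) {
        if (values.isEmpty()) return 0.0;
        long sum = 0;
        for (long v : values) sum += v;
        return (double) sum / values.size();
    }
}
```

Keeping one such object per service and a separate one per node preserves the distinction between services and the nodes on which they execute.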

All of this information may be obtained periodically from a central (though more realistically a federated) data store or direct from the services themselves. However, both sys admins and developers (more on them later) will need to be able to connect to services (and composites) at will and inspect their governance criteria, e.g., when they last violated a contract, why, and under what input messages/state. The dynamic factor is incredibly important. This information needs to be made available across individual services as well as the entire SOA-P deployment.

Going back to what I mentioned earlier about governance: we're working on a separate and dedicated governance console that will receive alarms/warnings when contracts/SLAs are violated or close to being violated. Obviously the console is only one destination for these alerts: sys admin inboxes are just as important. However, that's where the infrastructure comes into play: remember that JBossESB has a very flexible architecture.

Traditional management tooling (e.g., via JMX) would include:

- start/stop a service.

- suspend/resume a service.

- add/update restriction lists for services. This limits the list of receivers that a service considers valid and will process messages from. A similar list of destinations for responses will exist. This plays into the role/relationship concept because although a developer may not consider the issue of security (maybe can't, given that services could be deployed into environments that did not exist when the developer was building the service), the sys admin (or service container admin) will have to.

- migrate services (and potentially dependent services).

- inspect service contract.

- update service definition.

- attach and tune specific service parameters.
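To make the restriction list idea a little more concrete, here is a minimal sketch of a per-service list that the sys admin maintains and the service consults before processing a message. The `RestrictionList` class is hypothetical and not part of JBossESB. Denying every sender until one is explicitly allowed is an assumption of this sketch, not a statement about how the platform behaves.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical restriction list: the senders a service will process messages from. */
class RestrictionList {
    // Thread-safe set, since admins may update it while the service is running.
    private final Set<String> allowedSenders = ConcurrentHashMap.newKeySet();

    /** Called by the sys admin (e.g., from a management console), not the developer. */
    void allow(String sender) { allowedSenders.add(sender); }

    void revoke(String sender) { allowedSenders.remove(sender); }

    /** Assumption of this sketch: anything not explicitly allowed is rejected. */
    boolean accepts(String sender) { return allowedSenders.contains(sender); }
}
```

A similar list could hold the valid destinations for responses.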

Design time tooling includes:

- defining the service definition/contract, which includes specifying what message types it allows. This is tied into the service implementation in order that the ESB infrastructure can verify incoming messages against this contract for validity. Part of the contract will also include security and role information which will define who can interact with the service (may be fine grained based on time of day, specific message type, etc.) Policies are attached at this level on a per service or per operation basis (if not defined on an operation basis, the service level policy is taken if defined).

- policy definition/construction, tracking and enforcement. Not just part of the development tool, but also an integral part of the underlying ESB infrastructure. Policies need to be shared so that other developers can utilise them in their own service construction. Typically these will be stored in the repository.

- service construction from other services, i.e., composite services. This has an input on SLA and on governance enforcement. In some cases a physical instance of the service may not exist either and the infrastructure becomes responsible for imposing the abstraction of a service by directing interactions accordingly.

- inspecting the registry and repository during design time to locate and inspect desired services for composition within applications. Also ties into runtime management so that the user can inspect all running services. This would also tie into our graphical process flow tool, by allowing a drag-and-drop approach to application construction.

- service development then into service deployment. The tool will allow the user to view a list of available nodes and processes. The availability, performance etc. of those nodes will also be displayed (more tooling and infrastructure support). Then you can drag a service implementation on to the node and deploy it, either dynamically or statically. This ties into the runtime management tool that allows the user to view deployed services on nodes (see previously).
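The policy rule in the first item above (use the operation-level policy if one is defined, otherwise fall back to the service-level policy) can be sketched in a few lines. The `PolicyRegistry` class, and the use of plain strings as policy names, are hypothetical simplifications for illustration only.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

/** Hypothetical policy lookup: an operation-level policy wins, else the service-level one. */
class PolicyRegistry {
    private final Map<String, String> servicePolicies = new HashMap<>();
    private final Map<String, String> operationPolicies = new HashMap<>();

    void setServicePolicy(String service, String policy) {
        servicePolicies.put(service, policy);
    }

    void setOperationPolicy(String service, String operation, String policy) {
        operationPolicies.put(key(service, operation), policy);
    }

    /** Returns the effective policy, or empty if neither level defines one. */
    Optional<String> resolve(String service, String operation) {
        String policy = operationPolicies.get(key(service, operation));
        if (policy == null) {
            policy = servicePolicies.get(service); // fall back to the service level
        }
        return Optional.ofNullable(policy);
    }

    private static String key(String service, String operation) {
        return service + "#" + operation;
    }
}
```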

Service implementation tool:

- from the client perspective you go to the registry and select the right service based on requirements (functional as well as non-functional). The associated contract defines the message format, as mentioned earlier. The tool will either auto-generate an appropriate stub for the client code or provide a way of tying state variables (from the application code, or incoming messages etc.) into the outbound invocations on the service. Fairly obviously we cannot prevent developers from ignoring the design-time tools and coding their applications without that support, which is really where the need for stubs comes in. At the tooling level, we're really looking at defining client tasks: the developer writes the task and defines its output instance variables, which are hooked into the service's input variables. As far as the client tool is concerned, we are simply tying together these variables. Under the covers, the tool generates the right set of service-specific messages. The ESB infrastructure sends, receives and dispatches accordingly. Capabilities such as security and transactions may be exposed to the client.

- from the service developer perspective, we are defining services as compositions of tasks, actions, dispatchers etc. In the graphical designer we specify the input variables that are required for each operation type (defined as specific messages). This also plays into the contract definition effort mentioned earlier, since the message formats accepted by a service are implicitly defined by the requirements on input state variables.

- there's a separate discussion to be had about the term "task". The OPENflow work that originated within Arjuna can help here.

- WS-CDL tooling here as well.

- the need to be able to deploy services into a virtual environment to allow it to be tested without affecting a running system. A service has to be able to be deployed in a test mode. What this means is that at a minimum the service is not available to arbitrary users. Test services should also not be deployed into a running process/container that is being used by other (non-test) services and applications in case they cause it to fail and, worst case scenario, take the entire process with them.

Some things that I have not had a chance to write up yet include roles and relationships between roles, and (related) security and ACLs. Task definitions (lots of notes on that). Diagrams illustrating the kinds of things I want to see. So expect more later.

Monday, January 14, 2008

Wednesday, January 9, 2008

Making SOA a science and not an art

Over 5 years ago, around the time BPEL4WS came out, WSCI was released. Because this was just at the peak of the IBM/MSFT versus everyone else wars, the uneducated observer saw this as yet another attempt by Oracle et al to fight the beast. However, WSCI was a far different type of animal. It went to W3C under the guise of the WS-Choreography group and eventually WS-CDL was born. WS-CDL is one of those standards that I've been observing from afar: I was involved at the periphery in the early years of WS-T and OASIS WS-CAF, because of the influence of transactions (and context), but apart from being on the mailing list I've had no further input. But WS-CDL is also one of the nicest, most powerful and most under-rated standards around. So although I've been watching from afar, I've also been waiting for the right moment to use its power in whatever way makes sense.

In Arjuna there were limited opportunities for this. I tried with a few of our engagements, but the "Why do I want this when there's BPEL?" question kept coming up. As a result in some ways WS-CDL is also one of the most frustrating standards: you really want to shake the people who ask those questions and force them to read up on WS-CDL rather than believe the anti-publicity. I also wish that some of the bigger players involved in its development had done a lot more. Oh well, such is life in the standards world.

However, once I joined JBoss and was put in charge of the JBossESB development, things started to change. Here was a great opportunity! Here was a distributed system that was under development, would use Web Services and SOA at its heart, would be about large scale (size of participants and physical locality) and where you simply did not control all of the infrastructure over which applications ran.

Now many people in our industry ignore formal methods or pay lip service to them, believing they are only of use to theoreticians. Unfortunately until that changes Computer Science will always be a "soft" science: more an art than anything. That's not a good thing because it limits efficiency. In a local application (everything on one machine) you can get away with cutting some corners. But in a distributed system, particularly one that needs to be fault tolerant, it's worse. For example, how do you prove the correctness of a system when you cannot reason about the ways in which the individual components (or services) will act given specific expected (or unexpected) stimuli? Put another way, how can you ensure that the system behaves as expected and continues to do so as it executes, especially if it has non-deterministic properties? As the complexity of your application increases, this problem approaches being NP-complete.

Rather than just throwing together an "architecture" diagram and developing services in relative isolation, and trusting to luck (yes, that's often how these things are developed in the real world), we decided that something better had to exist for our customers. Now there are formal ways of doing this using PetriNets, for example. WS-CDL uses Pi-Calculus to help define the structure of your services and composite application; you can then define the flow of messages between them, building up a powerful way in which to reason effectively about the resultant. On paper the end result is something that can be shown to be provably correct. And this is not some static, developer-time process either. Because these "contracts" between services work in terms of messages and endpoints, you can (in theory) develop runtime monitoring that enhances your governance solution and is (again) provably correct: not only can you reason successfully about your distributed system when it is developed and deployed initially, but you can continue to do so as it executes. A good governance solution could tie into this and be triggered when the contract is violated, either warning users or preventing the system from making forward progress (always a good thing if a mission-critical environment is involved).
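To give a feel for what runtime monitoring against a message contract means, here is a deliberately simplified sketch. It is not WS-CDL and not Pi-Calculus: it reduces a contract to a plain state machine over message types, and the `ContractMonitor` class and the message names in the usage note are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical runtime monitor: checks observed messages against an allowed sequence. */
class ContractMonitor {
    // Maps "state/messageType" to the state reached after that message.
    private final Map<String, String> transitions = new HashMap<>();
    private String state;
    private boolean violated = false;

    ContractMonitor(String initialState) { this.state = initialState; }

    /** Declares that messageType is legal in state 'from' and leads to state 'to'. */
    ContractMonitor allow(String from, String messageType, String to) {
        transitions.put(from + "/" + messageType, to);
        return this;
    }

    /** Feeds one observed message; returns false if the contract is (or already was) violated. */
    boolean observe(String messageType) {
        if (violated) return false;
        String next = transitions.get(state + "/" + messageType);
        if (next == null) {
            violated = true; // a governance hook could warn users or halt progress here
            return false;
        }
        state = next;
        return true;
    }

    String state() { return state; }

    boolean isViolated() { return violated; }
}
```

For example, a monitor built with `allow("start", "quoteRequest", "quoted")` and `allow("quoted", "order", "ordered")` accepts a quote request followed by an order, but flags a violation if an order arrives first.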

Now this is complex stuff. It's not something I would like to have to develop from scratch. Fortunately for JBoss (now Red Hat), there's a company that develops software that is based on WS-CDL: Hattrick Software, principal sponsors of the Pi4 Technologies Foundation. Fortunately again, one of the key authors of the standard (my friend/colleague Steve Ross-Talbot) is both CTO of Hattrick Software and Chair of the Pi4 Technologies Foundation. Steve has been evangelizing WS-CDL for years, pushing hard against those doors that have "Closed: Using BPEL. Go Away." signs on them. We're starting to see the light as users see the deficiencies of BPEL (the term "Use the right tool for the right job" keeps coming to mind) and look for solutions. But it's been a long, slow process. Still, when like minds come together, sometimes "something wonderful" can happen. People at Hattrick have been working with us at Red Hat for several months to integrate their product (which is open source and lets you develop scenarios to test out your deployments and then monitor them for correctness once they go live) with JBossESB and the SOA Platform. This work is almost complete and it will offer a significant advantage to both companies' customers.

I can't really go into enough detail here to show how good this combination is, but here are a few example images. The example scenario is a distributed auction, with participants including a seller and several buyers. With the WS-CDL tooling you can define your scenarios (the interactions between parties) like this

You can then use the tool to define the roles and relationships for your application:

And then you can dive down into very specific interactions such as credit checking:

Or winning the auction:

We're hoping to offer a free download of the Hattrick Software for the SOA Platform in the new year, and maybe even for the ESB. Since it's all Eclipse based, it should be relatively straightforward to tie this into our overall tooling strategy as well, providing a uniform approach to system management and governance. But even without this, what this combination offers is very important: you can now develop your applications and services and prove they work before deployment. Furthermore, in the SOA world of service re-use, where you probably didn't develop everything, a suitable WS-CDL related contract for each service should allow developers to re-use services in a more formal manner and prove a priori that the composite application is still correct, rather than doing things in the ad hoc manner that currently pervades the industry.
